There are a couple I have in mind. Like many techies, I am a huge fan of RSS for content distribution and XMPP for federated communication.

The really niche one I like is S-expressions as a data and configuration format in place of JSON, YAML, TOML, etc.
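To give a feel for why S-expressions appeal as a config format, here is a minimal sketch of a reader. The function name `parse_sexpr` and the sample config are made up for illustration, and it only handles bare atoms and parentheses (no strings with spaces, no comments, no error messages for unbalanced input).

```python
def parse_sexpr(text):
    """Parse one S-expression into nested Python lists of string atoms."""
    # Pad parentheses with spaces so a plain split() yields the tokens.
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()

    def read(pos):
        token = tokens[pos]
        if token == "(":
            items = []
            pos += 1
            # Collect items until the matching closing parenthesis.
            while tokens[pos] != ")":
                item, pos = read(pos)
                items.append(item)
            return items, pos + 1
        return token, pos + 1

    expr, _ = read(0)
    return expr


config = parse_sexpr("(server (host example.org) (port 8080))")
print(config)
```

The whole grammar fits in a dozen lines, which is most of the attraction.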

I am a big fan of plaintext formats, although I wish Markdown had a few more features like tables.

I’ll give my usual contribution to RSS feed discourse, which is that, news flash! RSS feeds support video!

It drives me crazy when podcasters are like, “thanks for listening to our audio podcasts. We also have a video feed for our YouTube subscribers.” Just let me have the video in PocketCasts please!
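For anyone wondering what "RSS supports video" looks like in practice: an RSS 2.0 item carries its media in an `<enclosure>` element with a URL, a byte length and a MIME type, and nothing stops that type from being a video one. A rough sketch using only the standard library follows; the helper name `build_video_item`, the URL and the file size are placeholders I made up.

```python
import xml.etree.ElementTree as ET


def build_video_item(title, video_url, size_bytes, mime_type="video/mp4"):
    """Build an RSS <item> whose <enclosure> points at a video file."""
    item = ET.Element("item")
    ET.SubElement(item, "title").text = title
    # RSS 2.0 enclosures take a url, a length in bytes and a MIME type.
    ET.SubElement(
        item,
        "enclosure",
        {"url": video_url, "length": str(size_bytes), "type": mime_type},
    )
    return item


item = build_video_item("Episode 1", "https://example.com/ep1.mp4", 123456789)
xml_text = ET.tostring(item, encoding="unicode")
print(xml_text)
```

Whether a given podcast client actually plays the video is up to the client, of course.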

I just wrote a YouTube scraper and exported to RSS and into my podcast client. Using YouTube any other way is masochism in comparison.

I feel you, but I don’t think podcasters point to YouTube for video feeds because of a supposed limitation of RSS. They do it because of the storage and bandwidth costs of hosting video.

I wish there was a good open standard for task management or todo lists.

I know there’s todo.txt, but it lacks features like dependent tasks, and overall the plain text format limits features and implementations.

Do you know if it allows dependent tasks?

Yes, but not all clients expose dependent tasks (which is sadly a common issue with open standards: they aren’t always properly implemented). I’m using Tasks.org on my phone (which supports dependent tasks), synchronizing to a Nextcloud server with the Tasks app (which supports dependent tasks now, but didn’t for a long time), which also syncs to Thunderbird (which does not appear to show dependent tasks as dependents).

Well that’s still good news that I didn’t expect! I suppose I will look into that then. Thank you!

ISO 8601 date format. Not because it’s a standard, but because it’s simple, sensible, clearly defined, and very effective.

Date fields in any order other than most-significant-digits-first are not only counterintuitive, but needlessly complicated to work with. Omitting critical information like the century is ambiguous and confusing.

We don’t live in isolated villages any more. Mixing and matching those problems by accepting all the world’s various regional and personal date styles, especially with no reliable indication of which ones apply in any given case, leads to the hodgepodge of error-prone date madness that we have today.

The 2024-09-02 format should be taught in schools and required in official documents. Let the antiquated date styles fall into disuse outside of art and personal correspondence, like cursive writing.

For the newbies: RFC 3339 vs ISO 8601. Bookmark this site.

That looks like an interesting diagram, but the text in it renders too small to read easily on the screen I’m using, and trying to open it leads to a javascript complaint and a redirect that activates before I can click to allow javascript. If it’s yours, you might want to look into that.

The table below works, though. Thanks for the link.

7 digit years feels way too optimistic, but I’ll be rooting for us.

The year is the information that most of the time is the least significant in a date, in day to day use.

DDMMYY is perfect for daily usage.

> DDMMYY is perfect for daily usage.

Except that DDMMYY has the huge ambiguity issue of people potentially interpreting it as MMDDYY. And it’s not straight sortable.

My team switched to using YYYY-MM-DD in all our inner communication and documents. The “daily date use” is not the issue you think it is.

> Except that DDMMYY has the huge ambiguity issue of people potentially interpreting it as MMDDYY.

Yes, and YYYY-MM-DD can potentially be interpreted as YYYY-DD-MM. So that is a zero argument.

I never said that the date format should never be used, just that significance is an arbitrary value; what significant means depends on the context. If YYYY-MM-DD were so great in everyday use, then more or even most people would use it, because people, in general, tend to do things that make their life easier.

There is no superior date format, there are just date formats that are better for specific use cases.

> My team switched to using YYYY-MM-DD in all our inner communication and documents

That is great for your team, but I don’t think that your team is large enough to have any kind of statistical relevance at all. So it is a great example for a specific use case but not an argument for general use at all.

> Yes and YYYY-MM-DD can potentially be interpreted as YYYY-DD-MM. So that is a zero argument.

No country uses “year day month” ordered dates as standard. “Month day year”, on the other hand, has huge use. It’s the conventions that cause the potential for ambiguity and confusion.

> That is great for your team, but I don’t think that your team is large enough to have any kind of statistical relevance at all. So it is a great example for a specific use case but not an argument for general use at all.

Entire countries, like China, Japan, Korea, etc., use YYYY-MM-DD as their date standard already.

My point was that once you adjust, it actually isn’t as painful to use as it first appears it could be, and it has great advantages. I didn’t say there wasn’t an adjustment hurdle that many people would balk at.

https://en.m.wikipedia.org/wiki/List_of_date_formats_by_country

> Entire countries, like China, Japan, Korea, etc., use YYYY-MM-DD as their date standard already.

And every person in those countries uses YYYY-MM-DD always in their day to day communication? I really doubt that. I am sure even in those countries most people will still use short forms in different formats.

Yes, and their shorthand versions, like writing 9/4, have the same problem of being ambiguous.

You keep missing the point and moving the goal posts, so I’ll just politely exit here and wish you well. Peace.

I never moved the goalposts. All I ever said was that a forced and clunky date format like YYYY-MM-DD will never find broad use or acceptance among the majority of the world’s population. It is not made for easy day to day use.

If it sounded like I moved goalposts, that may be due to English being my second language. Sorry for that.

But yes, I think we both have made our positions and statements clear, and there is not really a common ground for us. Not because one of us would be right or wrong but because we are not talking about the topic on the same level of abstraction. I talk about it from a social, very down-to-earth perspective and you are at least 2 levels of abstraction above that. Nothing wrong with that but we just don’t see the same picture.

And yes, using YYYY-MM-DD would be great. I don’t say anything against that on a general level; I just don’t ever see any chance of it being used commonly.

So thank you for the great discussion and have a nice day.

Your day to day use isn’t everyone else’s. We use times for a lot more than “I wonder what day it is today.” When it comes to recording events, or planning future events, pretty much everyone needs to include the year. Getting things wrong by a single digit is presented exactly in order of significance in YYYY-MM-DD.

And no matter what, the first digit of a two-digit day or two-digit month is still more significant in a mathematical sense, even if you think that you’re more likely to need the day or the month. The 15th of May is only one digit off of the 5th of May, but that first digit in a DD/MM format is more significant in a mathematical sense and less likely to change on a day to day basis.

For any scheduled date it is irrelevant whether you miss it by a day, a month or a year. So from that perspective every part of it is exactly the same: if the date is wrong then it is wrong. You say that it is sorted in order of most significance, so for a date it is more significant whether it happened in 1024, 2024 or 9024? That may be relevant for historical or scientific purposes, but not many people need that kind of precision. Most people use calendars for stuff days or months ahead or behind, not years or decades.

If I get my tax bill, I don’t care about the year in the date, because I know that the government wants the money this year, not next year or in ten. If I have a job interview, I don’t care about the year; the day and month are what is relevant. There is a reason why the year is often left out completely when dates are noted or made: because it is obvious.

Yes I can see why YYYY-MM-DD is nice for stuff like archiving purposes, it makes sorting and grouping very easy but there they already use the best system for the job.

For digital documents I would say that date and time information should be stored in a defined computer readable standard so that the document viewer can render or use it in any way needed. That could be Swatch Internet Time as far as I care, because hopefully I would never look at the raw data at all.

> You say that it is sorted in order of most significance, so for a date it is more significant whether it happened in 1024, 2024 or 9024?

Most significant to least significant digit has a strict mathematical definition that you don’t seem to be following, and it applies to all numbers, not just numerical representations of dates.

And most importantly, the YYYY-MM-DD format is extensible into hh:mm:ss too, within the same schema, out to the level of precision appropriate for the context. I can identify a specific year when the month doesn’t matter, a specific month when the day doesn’t matter, a specific day when the hour doesn’t matter, and on down to minutes, seconds, and decimal portions of seconds to whatever precision I’d like.

Ok, then I am sure we will all be using that very soon, because abstract mathematical definitions always map perfectly onto real world usage and needs.

It is not that I don’t follow the mathematical definition of significance; it is just invalid for the view and scope of the argument that I make.

YYYY-MM-DD is great for official documents but not for common use. People will always trade precision for ease of use, and that will never change. And in most cases the year is not relevant at all so people will omit it. Another big issue: people tend to write like they talk and (as far as I know) nobody says the year first. That’s exactly why we have DD-MM and MM-DD.

YYYY-MM-DD will only work in enforced environments like official documents or workspaces, because everywhere else people will use shortcuts. And even the best mathematical definition in the world will not change that.

I arrived to manage releases at a company where the previous manager had named releases like “release04092016”, following the US convention. My first recommendation was to name releases “releaseyyyymmdd”, so “release20160409”. Another manager asked me why we should change that, so I showed her a sorted list of release branches in git and asked her: can you tell me when the last release was? (A very common question.) Of course, to find the last release you need to check the whole list, because sorting by mmddyyyy is useless. With yyyymmdd the answer was immediate: just look at the last row.

And it can be sorted alphabetically in all software. That’s a pretty big advantage when handling files on a computer

Also, you can sort by ascending file names
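The sorting point is easy to check for yourself. A small sketch, with three invented release dates and the same naming schemes as in the story above:

```python
from datetime import date

# Three made-up release dates, deliberately not in chronological order.
release_dates = [date(2016, 4, 9), date(2015, 12, 1), date(2016, 1, 15)]

# Plain alphabetical sort of the names, as a file manager or `git branch` would do.
mmddyyyy = sorted(d.strftime("release%m%d%Y") for d in release_dates)
yyyymmdd = sorted(d.strftime("release%Y%m%d") for d in release_dates)

print(mmddyyyy)  # last entry is NOT the newest release
print(yyyymmdd)  # last entry is the newest release
```

With month-first names the last row is the December 2015 release, which is actually the oldest of the three. With year-first names, alphabetical order and chronological order are the same thing.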

I had the fortune of being hired to build my department up from zero, and one of the first “rules” I made was that all dates are ISO 8601. Now every process runs on 8601; if you use anything different, your code is going to fail eventually when it meets another date column in 8601.

RFC 3339 is a simplified profile of ISO 8601 that only covers YYYY-MM-DD style formatting. If you only ever use that format and avoid things like “2024-W36”, they’re mostly interchangeable.
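To make the “2024-W36” remark concrete, here is a small sketch with Python's standard `datetime` module (Python 3.8 or newer for `fromisocalendar`). It shows the same day written in the calendar form that both standards share and in the ISO 8601 week form that RFC 3339 leaves out.

```python
from datetime import date

# Calendar date: valid in both RFC 3339 and ISO 8601.
calendar_form = date.fromisoformat("2024-09-02")

# ISO 8601 week date "2024-W36-1": ISO year 2024, week 36, day 1 (Monday).
week_form = date.fromisocalendar(2024, 36, 1)

print(calendar_form, week_form, calendar_form == week_form)
print(tuple(calendar_form.isocalendar()))
```

Both spellings name the same day, but only the first one is something an RFC 3339 parser is expected to accept.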

I love this standard. If you dig deeper into it, the standard also covers a way to express intervals and periods. E.g. “P1Y2M10DT2H30M” represents one year, 2 months, 10 days, 2 hours and 30 mins.
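A minimal sketch of reading such a duration string, assuming only whole-number fields and no week (`W`) form. The name `parse_duration` and the regular expression are my own illustration, not taken from any particular library.

```python
import re

# Date part (Y, M, D) comes before the "T"; time part (H, M, S) after it.
DURATION_RE = re.compile(
    r"^P(?:(?P<years>\d+)Y)?(?:(?P<months>\d+)M)?(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+)S)?)?$"
)


def parse_duration(text):
    """Split an ISO 8601 duration like 'P1Y2M10DT2H30M' into integer fields."""
    match = DURATION_RE.match(text)
    # Reject strings that do not match or that carry no fields at all ("P", "PT").
    if match is None or not any(match.groups()):
        raise ValueError(f"not a supported ISO 8601 duration: {text!r}")
    return {name: int(value or 0) for name, value in match.groupdict().items()}


parsed = parse_duration("P1Y2M10DT2H30M")
print(parsed)
```

Note how the `T` separator is what lets `M` mean months before it and minutes after it.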

I recall once using the standard when writing a cron-style scheduler.

I also like the POSIX “seconds since 1970” standard, but I feel that should only be used in RAM when performing operations (time differences in timers etc.). It irks me when it’s used for serialising to text/JSON/XML/CSV.

Also: Does Excel recognise a full ISO8601 timestamp yet?

> I also like the POSIX “seconds since 1970” standard, but I feel that should only be used in RAM when performing operations (time differences in timers etc.). It irks me when it’s used for serialising to text/JSON/XML/CSV.

I’ve seen bugs where programmers tried to represent date in epoch time in seconds or milliseconds in json. So something like “pay date” would be presented by a timestamp, and would get off-by-one errors because whatever time library the programmer was using would do time zone conversions on a timestamp then truncate the date portion.

If the programmer had used ISO 8601 style formatting, I don’t think they would have included the time part, and the bug could have been avoided.

Use dates when you need dates and timestamps when you need timestamps!

That’s an issue with the time library, not with timestamps. Timestamps are always in UTC; you need to do the conversion to your local time when displaying the value. There should be no possible off-by-one errors unless you are doing something really wrong.
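Both sides of this exchange can be seen in a few lines. This is a sketch of how the off-by-one could arise, not a reconstruction of the actual bug: the pay date and the client's UTC-5 offset are assumptions for illustration.

```python
from datetime import date, datetime, timedelta, timezone

# A "pay date" stored as an epoch timestamp for midnight UTC on 2024-09-02.
pay_date_ts = datetime(2024, 9, 2, tzinfo=timezone.utc).timestamp()

# Reading it back in UTC gives the intended date.
utc_view = datetime.fromtimestamp(pay_date_ts, tz=timezone.utc).date()

# A hypothetical client five hours behind UTC converts first, then truncates.
client_tz = timezone(timedelta(hours=-5))
local_view = datetime.fromtimestamp(pay_date_ts, tz=client_tz).date()

# A date-only ISO 8601 string has no time part to convert in the first place.
iso_view = date.fromisoformat("2024-09-02")

print(utc_view, local_view, iso_view)
```

The timestamp itself is unambiguous, so the fault is in the convert-then-truncate step. A date-only string simply never offers that step.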

It’s completely bonkers that JPEG-XL is as good as it is and no one wants to actually implement it in web browsers.

What’s so good about it?

- Existing JPEG files (which are the vast, vast majority of images currently on the web and in people’s own libraries/catalogs) can be losslessly compressed even further with zero loss of quality. This alone means that there’s benefits to adoption, if nothing else for archival and serving old stuff.

- JPEG XL encoding and decoding is much, much faster than pretty much any other format.

- The format works for both lossy and lossless compression, depending on the use case and need. Photographs can be encoded in a lossy way much more efficiently than JPEG and things like screenshots can be losslessly encoded more efficiently than PNG.

- The format anticipates being useful for both screen and prints. Webp, HEIF, and AVIF are all optimized for screen resolutions, and fail at truly high resolution uses appropriate for prints. The JPEG XL format isn’t ready to replace camera RAW files, but there’s room in the spec to accommodate that use case, too.

It’s great and should be adopted everywhere, to replace every raster format from JPEG photographs to animated GIFs (or the more modern live photos format with full color depth in moving pictures) to PNGs to scanned TIFFs with zero compression/loss.

> The format works for both lossy and lossless compression, depending on the use case and need. Photographs can be encoded in a lossy way much more efficiently than JPEG and things like screenshots can be losslessly encoded more efficiently than PNG.

Someone made a fair point that having a format be both lossy and lossless is not necessarily a great idea. If you download a jpeg file you know it will be compressed; if you download a png it will be lossless. Sifting through jxl files to check whether they’re lossy or not doesn’t sound very fun.

All in all I’m a big supporter of jxl though, it’s one of the only github repos I actively follow.

While I agree that it’s somewhat bad that there is no distinction between lossless and lossy jxl in the file extension, I think it’s really not a big deal compared to the present situation with jpg/png.

The reason being that if you download a png file you have no idea if it’s been converted from jpg, if it’s a screenshot of a jpg, or if it’s been subjected to lossy reencoding by a tool or a website upload process.

The only thing you can really do to try and see if the file you’ve downloaded has suffered encoding loss is to do an image search on it and see if there are any better quality versions out there. You’d do the exact same thing with a jxl file.

Functionally speaking, I don’t see this as a significant issue.

JPEG quality settings can run a pretty wide gamut, and obviously wouldn’t be immediately apparent without viewing the file and analyzing the metadata. But if we’re looking at metadata, JPEG XL reports that stuff, too.

Of course, the metadata might only report the most recent conversion, but that’s still a problem with all image formats, where conversion between GIF/PNG/JPG, or even edits to JPGs, would likely create lots of artifacts even if the last step happens to be lossless.

You’re right that we should ensure that the metadata does accurately describe whether an image has ever been encoded in a lossy manner, though. It’s especially important for things like medical scans where every pixel matters, and needs to be trusted as coming from the sensor rather than an artifact of the encoding process, to eliminate some types of error. That’s why I’m hopeful that a full JXL based workflow for those images will preserve the details when necessary, and give fewer opportunities for that type of silent/unknown loss of data to occur.

> Existing JPEG files (which are the vast, vast majority of images currently on the web and in people’s own libraries/catalogs) can be losslessly compressed even further with zero loss of quality. This alone means that there’s benefits to adoption, if nothing else for archival and serving old stuff.

Funny thing is, there was talk on the Chrome bug tracker of using just this ability transparently at the HTTP layer (like gzip/brotli compression), but they’re so set on pushing their AVIF format that they backed away from it.

This is why I fucking love the internet.

I mean, I’ll never take the time to get this knowledgeable about image formats, but I am ABSOLUTELY fuckdamn thrilled that at least SOMEONE out there takes it seriously.

Good on you, pixel king

Basically smaller file sizes than JPEG at the same quality and it also automatically loads a lower quality version of the image before it loads a higher quality version instead of loading it pixel by pixel like an image would normally load. Google refuses to implement this tech into Chrome because they have their own avif format, which isn’t bad but significantly outclassed by JPEG-XL in nearly every conceivable metric. Mozilla also isn’t putting JPEG-XL into Firefox for whatever reason. If you want more detail, here’s an eight minute video about it.

I’m under the impression that there’s two reasons we don’t have it in chromium yet:

- Google initially ignored jpeg-xl, but then everyone jumped on it. Now they feel they have to create a post-hoc justification for not supporting it earlier, which is tricky, and they have a sunk cost situation that keeps them ignoring it.

- Google was burnt by the webp vulnerability, which happened because there was only one decoder library. Now they’re waiting for more jpeg-xl libraries that have optimizations (which rules out reference implementations), good support (which rules out libraries by single authors), proven battle-hardening (which will only happen over time), and are written safely to avoid another webp-style vulnerability.

Google already wrote the wuffs language which is specifically designed to handle formats in a fast and safe way but it looks like it only has one dedicated maintainer which means it’s still stuck on a bus factor of 1.

Honestly, Google or Microsoft should just make a team to work on a jpg-xl library in wuffs while adobe should make a team to work on a jpg-xl library in rust/zig.

That way everyone will be happy, we will have two solid implementations, and they’ll both be made focussing on their own features/extensions first so we’ll all have a choice among libraries for different needs (e.g. browser lib focusing on fast decode, creative suite lib for optimised encode).

Didn’t Google already include jpeg-xl support in developer versions of Chromium, just to remove it later?

Chromium had it behind a flag for a while, but if there were security or serious enough performance concerns then it would make sense to remove it and wait for the jpeg-xl encoder/decoder situation to change.

It baffles me that someone large enough hasn’t gone out of their way to make a decoder for chromium.

The video streaming services have done a lot of work to switch users to better formats to reduce their own costs.

If a CDN doesn’t add it to chromium within the next 3 years, I’ll be seriously questioning their judgement.

Adobe is backing the format, Apple support is coming along, and there are rumors that Apple is switching from HEIC to JPEG XL as a capture format as early as the iPhone 16 coming out in a few weeks. As soon as we have a full blown workflow that can take images from camera to post processing to publishing in JXL, we might see a pretty strong push for adoption at the user side (browsers, websites, chat programs, social media apps and sites, etc.).

Do you know the QOI format? I would appreciate your opinion about it.

QOI is just a format that’s easy for a programmer to get their head around.

It’s not designed for everyday use and hardware optimization like jpeg-xl is.

You’re most likely to see QOI in homebrewed game engines.

To be honest, no. I mainly know about JPEG XL only because I’m acutely aware of the limitations of standard JPEG for both photography and high resolution scanned documents, where noise and real world messiness cause all sorts of problems. Something like QOI seems ideal for synthetic images, which I don’t work with a lot, and wouldn’t know the limitations of PNG as well.

I think I would feel better about using JPEG-XL where I currently use WebP. Here’s hoping for wider support.

Zigbee or really any Bluetooth alternative.

Bluetooth is a poorly engineered protocol. It jumps around the spectrum while transmitting, which makes it difficult and power intensive for bluetooth receivers to track.

I agree Bluetooth (at least Bluetooth Classic) is not very well designed, but not because of frequency hopping. That improves robustness and I don’t see why it would cost any more power. The hopping pattern is deterministic. Receivers know in advance which frequency to hop to.
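The "deterministic pattern" argument can be illustrated with a toy model. To be clear, this is not the real Bluetooth hop selection algorithm (which derives the channel from the device address and clock); it only shows that two sides sharing the same inputs compute the same channel sequence without any searching. The seed value and function name are invented.

```python
import random

NUM_CHANNELS = 79  # Bluetooth Classic hops across 79 channels of 1 MHz each


def hop_sequence(shared_seed, hops):
    """Toy stand-in for hop selection: a seeded PRNG picks each channel."""
    rng = random.Random(shared_seed)
    return [rng.randrange(NUM_CHANNELS) for _ in range(hops)]


# Both ends know the same seed, so they derive the same sequence independently.
transmitter = hop_sequence(shared_seed=0xC0FFEE, hops=8)
receiver = hop_sequence(shared_seed=0xC0FFEE, hops=8)

print(transmitter)
print(transmitter == receiver)
```

The receiver never has to "track" anything; it just tunes to the next channel it already knows is coming.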

IRC.

Jabber.

IPFS.

I also pick this guy’s IRC

PGP or GPG, however you spell it. You can encrypt stuff, protect your email from prying eyes!

Also FOSS in general.

The tooling around it needs to be brought up to snuff. It seems like it hasn’t evolved much in the last 20+ years.

I had a small team make an attempt to use it at work. Our conclusion was that it was too clunky. Email plugins would fool you into thinking it was encrypted when it wasn’t. When it did encrypt, the result wasn’t consistently readable by plugins on the receiving end. The most consistent method was to write a plaintext doc, encrypt it, and attach the encrypted version to the email. Also, key servers are set up by amateurs who maintain them in their spare time, and aren’t very reliable.

One of the more useful things we could do is have developers sign their git commits. GitHub can verify the signature using a similar setup to SSH keys.

It’s also possible to use TLS in a web of trust way, but the tooling around it doesn’t make it easy.

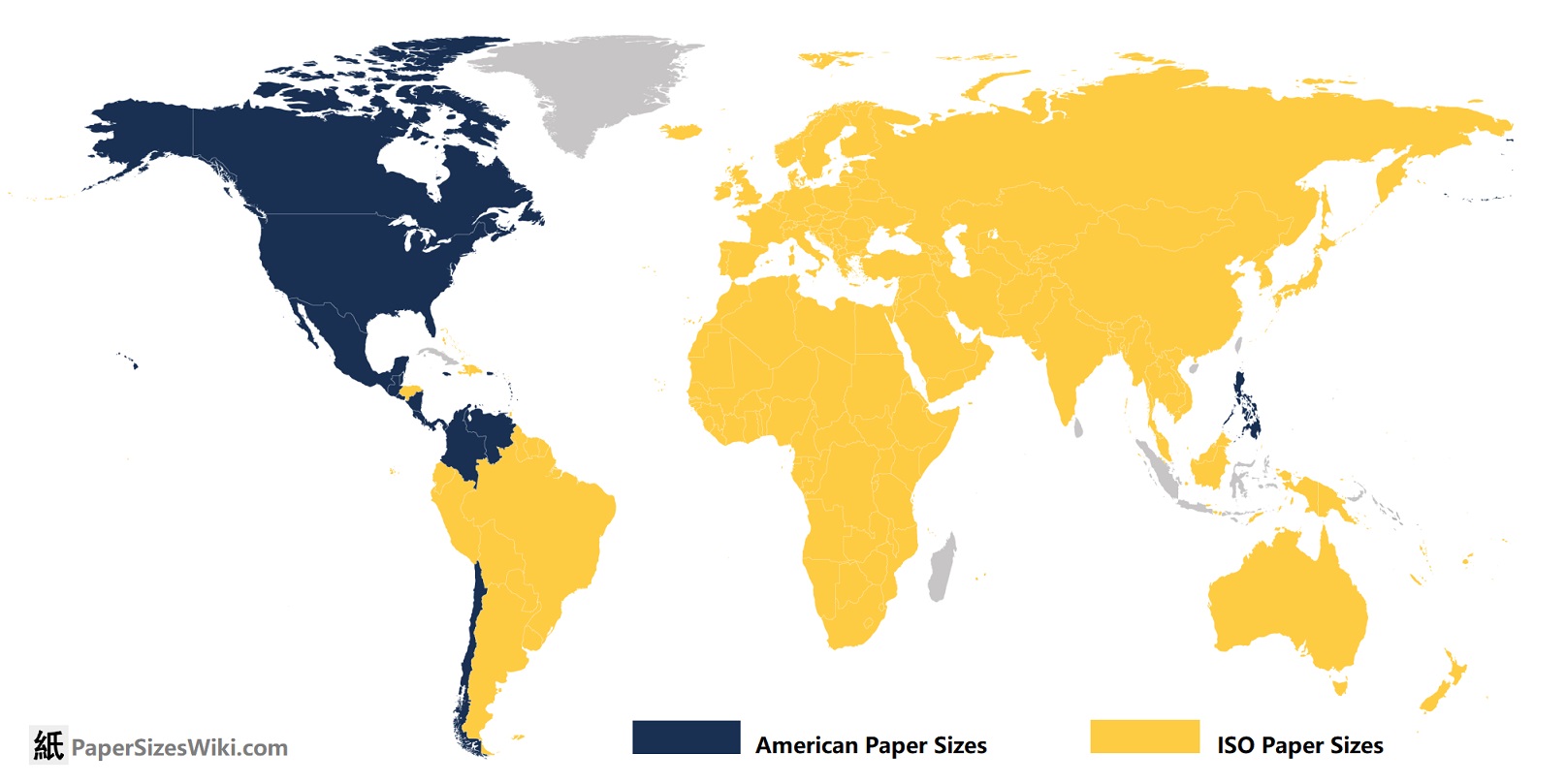

ISO 216 paper sizes work like this: https://www.printed.com/blog/paper-size-guide/

It’s so fucking neat and intuitive! How is it not used more???
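For anyone who can't open the link: as I understand ISO 216, A0 is 841 mm by 1189 mm (about one square metre), and every following size is the previous sheet cut in half across its long side, rounded down to whole millimetres. A small sketch of that rule, with a function name of my own choosing:

```python
def a_series(n):
    """Return (width_mm, height_mm) of ISO 216 size A<n>, starting from A0."""
    width, height = 841, 1189  # A0
    for _ in range(n):
        # Halve the long side (rounding down); the old short side becomes the long side.
        width, height = height // 2, width
    return width, height


for n in range(7):
    print(f"A{n}: {a_series(n)[0]} x {a_series(n)[1]} mm")
```

Because the aspect ratio is about 1 to the square root of 2, halving a sheet keeps the same shape, which is why scaling between sizes just works.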

sorry to tell you this bud…

It’s also worth noting that switching from ANSI to ISO 216 paper would not be a substantial physical undertaking, as the short side of even-numbered ISO 216 paper (e.g. A2, A4, A6, etc.) is narrower than for the ANSI equivalents. And for the odd-numbered sizes, I’ve seen Tabloid-size printers in America which generously accommodate A3.

For comparison, the standard “Letter” paper size (aka ANSI A) is 8.5 inches by 11 inches. (note: I’m sticking with American units because I hope Americans read this). Whereas the similar A4 paper size is 8.3 inches by 11.7 inches. Unless you have the rare, oddball printer which takes paper long-edge first, this means all domestic and small-business printers could start printing A4 today.

In fact, for businesses with an excess stock of company-labeled #10 envelopes – a common size of envelope, measuring 4.125 inches by 9.5 inches – a sheet of A4 folded into thirds will still (just barely) fit. Although this would require precision folding, that’s no problem for automated letter mailing systems. Note that the common #9 envelope (3.875 inches by 8.875 inches) used for return envelopes will not fit an A4 sheet folded in thirds. It would be advisable to switch entirely to A series paper and C series envelopes at the same time.
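The envelope arithmetic can be checked quickly. This sketch uses the A4 size of 210 mm by 297 mm and the envelope dimensions quoted above, and it ignores paper thickness and any clearance a real mailing machine would want.

```python
MM_PER_INCH = 25.4
A4_MM = (210, 297)  # width, height

# A4 in inches: roughly 8.27 x 11.69
a4_in = tuple(side / MM_PER_INCH for side in A4_MM)

# Folded in thirds along the long side: (height, width) of the folded sheet.
folded = (a4_in[1] / 3, a4_in[0])

# Envelope sizes in inches as (height, width).
ENVELOPES = {"#10": (4.125, 9.5), "#9": (3.875, 8.875)}


def fits(sheet, envelope):
    """True if the folded sheet is no larger than the envelope in both directions."""
    return sheet[0] <= envelope[0] and sheet[1] <= envelope[1]


for name, size in ENVELOPES.items():
    print(name, fits(folded, size))
```

The folded sheet is about 3.90 inches tall, which slips under the 4.125 inches of a #10 but is a hair over the 3.875 inches of a #9.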

Confusingly, North America has an A-series of envelopes, which bear no relation to the ISO 216 paper series. Fortunately, the overlap is only for the less-common A2, A6, and A7.

TL;DR: bring reams of A4 to the USA and we can use it. And Tabloid-size printers often accept A3.

My printer will print and scan any A size paper. But I can’t even buy A paper! Fucking America

Clearly the rest of the world are communists! It’s not us, it’s you! I’m not crying you’re crying! 😭😭😭

Presumably you could just buy that paper size? They’re pretty similar sizes; printers all support both sizes. I’ve never had an issue printing a US Letter sized PDF (which I assume I have done).

Kind of weird that you guys stick to US Letter when switching would be zero effort. I guess to be fair there aren’t really any practical benefits either.

I’ve literally never even seen A paper in America. Probably would have to special order it from another country

Ah fair enough.

I mean I’d love to use it. Of course America is behind the times of civilized nations.

Also, A4 simply has a better ratio than letter. Letter is too wide, making A4 better to hold and it fits more lines per page.

I wish standards were always open access. Not behind a 600 dollar paywall.

When it is paywalled I’m irritated it’s even called a standard.

DP >> HDMI

The term open-standard does not cut it. People should start using “publicly available and sharable” instead (maybe there is a better name for it).

ISO standards for example are technically “open”. But how relevant is that to a curious individual developer when anything you need to implement would require access to multiple “open” standards, each coming with a (monetary) price, with some extra shenanigans [archived] on top.

IEEE standards however are actually truly open, as in publicly available and sharable.

how about FOSS, free and open-source standards /s

why do we call standards open when they require people to pay for access to the documents? to me that does not sound open at all

Because non-open ones are not available, even for a price. Unless you buy something bigger than the “standard” itself of course, like a company that is responsible for it or having access to it.

There is also the process of standardization itself, with committees, working groups, public proposals, …etc involved.

Anyway, we can’t backtrack on calling ISO standards and their likes “open” on the global level, hence my suggestion to use more precise language (“publicly available and sharable”) when talking about truly open standards.

It’s a historical quirk of the industry. This stuff came around before Open Source Software and the OSI definition was ever a thing.

10BASE5 ethernet was an open standard from the IEEE. If you were implementing it, you were almost certainly an engineer at a hardware manufacturing company that made NICs or hubs or something. If it was $1,000 to purchase the standard, that’s OK, your company buys that as the cost of entering the market. This stuff was well out of reach of amateurs at the time, anyway.

It wasn’t like, say, DECnet, which began as a DEC project for use only in their own systems (but later did open up).

And then you have things like “The Open Group”, which controls X11 and the Unix trademark. They are not particularly open by today’s standards, but they were at the time.

IPv6. Stop engineering IoT junk on single-stack IPv4, you dipshits.

Ogg Opus. It’s superior to everything in every way. It’s free and there is absolutely no reason to not support it.

> IPv6. Stop engineering IoT junk on single-stack IPv4, you dipshits.

Amen

It blows my mind that MPEG 1.0 Layer III is still so dominant.

Count the number of devices in use today that will never support Opus, and it might not blow your mind any longer. Also, AFAIK, the reference implementation still doesn’t implement full functionality on hardware that lacks a floating point unit.

These things take time.

I remember using Xiph’s integer implementation of Ogg Vorbis on my Nokia N-Gage (Symbian S60). I wonder if it’s not a priority for Opus. IIRC, Opus is floats all the way down.

I remember trying to understand the Vorbis fixed-point codebase. It was completely bonkers; the three of us on this task couldn’t even draw a rough control flow diagram.

Out of curiosity, why ogg as opposed to other containers? What advantages does it have?

Definitely agree on the Opus part, but I am very ignorant on the ogg container.

Large ISPs still don’t support it. It’s a fucking travesty.

JSON5. It’s basically just JSON with several QoL improvements, like comments, that make it usable as a format for human consumption (as opposed to a serialization format).
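To show what plain JSON makes you give up, here is a sketch using Python's standard `json` module, which follows the strict grammar. The standard library has no JSON5 parser, so this only demonstrates the rejections; the helper name `is_valid_json` and the sample documents are made up.

```python
import json


def is_valid_json(text):
    """Return True if the strict standard-library parser accepts the text."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


plain = '{"name": "demo", "tags": ["a", "b"]}'
trailing_comma = '{"name": "demo", "tags": ["a", "b",],}'
with_comment = '{"name": "demo" // a comment\n}'

print(is_valid_json(plain))           # accepted
print(is_valid_json(trailing_comma))  # rejected by strict JSON
print(is_valid_json(with_comment))    # rejected by strict JSON
```

The last two documents are the kind of thing JSON5 is meant to allow.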

Objects may have a single trailing comma.

I just came.

I love that there’s someone out there who’s that passionate about JSON.

I hate grammars in anything that don’t support trailing commas. It’s even worse when it’s supported in some contexts and not others. Like lists are OK, but not function parameters.

TIL this exists

I like Doxygen’s implementation and extension of Markdown. Pair it with PlantUML and you have something worth being a standard.

Unicode editors for notes/todo formats, making markup unnecessary.

Does Unicode have bold/italics/underline/headings/tables/…etc.? Isn’t that outside of its intended goal? If not, how is markup unnecessary?

> Does unicode have bold/italics/underline/headings/tables/

Yes, and even 𝓈𝓉𝓊𝒻𝒻 𝕝𝕚𝕜𝕖 🅣🅷🅘🆂. And table lines & edges & co. are even already in ASCII.

> Isn’t that outside of its intended goal?

🤷<- this emoji has at least 6 color variants and 3 genders.

> If not, how is markup unnecessary?

Because the editor could place a 𝗯𝗼𝗹𝗱 instead of a **bold**, which is a best-case scenario with Markdown support btw. And I just had to escape the stars, which is a problem that native Unicode doesn’t pose.

What about people who prefer to type **bold** rather than type a word, highlight it, and find the Bold option in whichever textbox editor they happen to be using?

Which is what I ask for: better (or any at all) support for Unicode character variations, including soft keyboards.

Imagine there was a switch for bold, cursive, etc. on your phone keyboard. Why would you want to type markup?

And nobody would take **bold** away, if you want to write that.

I don’t like that approach. Text search won’t find all the different possible Unicode representations.

You always find some excuses, huh? That would be a bug.

Always? That’s my first reply. Bug of what? A flaired character has a different code than a standard one, so your files would be incompatible with any established tools like find or grep.
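The search problem is easy to reproduce. In this sketch the styled word is built from the Mathematical Sans-Serif Bold block (small a is U+1D5EE) so that nothing depends on how the characters survive copy and paste; the helper name `to_math_bold` is invented. It also shows that a tool would have to apply compatibility normalization (NFKC) before searching to find the word again.

```python
import unicodedata

SANS_BOLD_SMALL_A = 0x1D5EE  # MATHEMATICAL SANS-SERIF BOLD SMALL A


def to_math_bold(word):
    """Map lowercase ASCII letters to their Mathematical Sans-Serif Bold look-alikes."""
    return "".join(chr(SANS_BOLD_SMALL_A + ord(c) - ord("a")) for c in word)


plain = "bold"
styled = to_math_bold(plain)
note = f"this is {styled} text"

# A plain substring search, which is what find or grep effectively do, misses it.
found_raw = plain in note

# After NFKC normalization the styled letters fold back to ASCII.
found_normalized = plain in unicodedata.normalize("NFKC", note)

print(found_raw, found_normalized)
```

So the text is recoverable, but only by tools that normalize first, and the styling is lost in the process.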

Would you have to do that for every letter? I suppose a “bold-on/bold-off” character combination would be better/easier, and then you could combine multiple styles without multiplying the number of glyphs by some ridiculous number.

Anyways, because markup is already standardized, mostly. Having both unicode and markup would be a nightmare. More complicated markup (bulleted lists, tables) is simpler than it would be in Unicode. And markup is outside of Unicode’s intended purpose, which is to have a collection of every glyph. Styling is separate from glyphs, and has been for a long time, for good reason. Fonts, bold/italics/underline/strikethrough, color, tables and lists, headings, font size, etc. are simply not something Unicode is designed to handle.

https://cuelang.org/. I deal with a lot of k8s at work, and I’ve grown to hate YAML for complex configuration. The extra guardrails that Cue provides are hugely helpful for large projects.

Oh, this looks great!

I’ve been struggling between Kustomize and Helm. Neither seems to make k8s easier to work with.

I have to try cuelang now. Something sensible without significant whitespace that confuses editors, variables without templating.

I’ll have to see how it holds up with my projects.

Hmm, what alternative? XML :-)? People hate the Gradle DSL just for not being XML.

Oh this! YAML was a terrible choice. And that’s coming from someone who likes Python and prefers white spaces over brackets. YAML never clicked for me.

What you mean you can’t easily tell what this is?

- foo: - - : - bar: baz: [ - - ]