Is there a way to access engagement stats for a community to make a (daily) time series? Something along the lines of exporting data every 24 hours at 2:00 UTC.

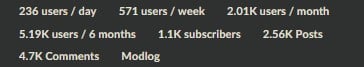

This is the data I am referring to:

Perhaps an API of some sort. A formal method that goes beyond writing a scraper.

Community statistics are available from the Lemmy API. For example, this URL returns the info for this community: https://lemm.ee/api/v3/community?name=fedigrow

The community statistics are listed in the returned data under `counts`. The API only returns current numbers, though. To create a time series you need to write a script to grab the community statistics on a regular basis and store the results.
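As a rough sketch of that "grab and store" step, the shell functions below take one snapshot per run and append it to a CSV with a UTC timestamp, so the file builds up into a time series. The function names (`fetch_counts`, `append_row`, `snapshot`) and the column selection are made up for illustration; the field names under `community_view.counts` are assumed to match what the instance returns, and `curl` and `jq` are assumed to be installed.

```sh
#!/bin/sh
# Hypothetical helpers; the names and CSV layout are illustrative only.

# Print the current counts for one community as a single CSV fragment.
# $1 = instance host (e.g. lemm.ee), $2 = community name (e.g. fedigrow)
fetch_counts() {
  curl -fsS --max-time 30 "https://$1/api/v3/community?name=$2" |
    jq -r '[.community_view.counts | (.subscribers, .posts, .comments, .users_active_day, .users_active_week, .users_active_month)] | @csv'
}

# Append one timestamped row to a CSV file, writing the header first
# if the file is missing or empty.
# $1 = output file, $2 = CSV fragment produced by fetch_counts
append_row() {
  [ -s "$1" ] || echo '"Timestamp (UTC)","Subscribers","Posts","Comments","Daily Users","Weekly Users","Monthly Users"' > "$1"
  printf '"%s",%s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$2" >> "$1"
}

# Take one snapshot: fetch the counts and store them.
# $1 = instance host, $2 = community name, $3 = output file
snapshot() {
  row=$(fetch_counts "$1" "$2") || return 1
  [ -n "$row" ] || return 1   # skip the write if the request returned nothing
  append_row "$3" "$row"
}
```

Calling `snapshot lemm.ee fedigrow stats.csv` once a day from a scheduler would give the daily series; with cron, a `0 2 * * *` schedule fires at 2:00 in the machine's local time, so that only lines up with 2:00 UTC if the machine's clock is set to UTC.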

Thanks! This seems like the best area for further research.

Any chance that historical statistics like these could be incorporated into lemmyverse.net?

Funny you say that, I literally made a branch last night looking to add more graphs and stats 😁 I wanted to add some more pretty graphs and improve the inspector page a bit.

It’ll probably take a little while but I’ll see what I can do 👍👏

Edit, this kinda exists already… https://lemmyverse.net/instance/sh.itjust.works

> Funny you say that, I literally made a branch last night looking to add more graphs and stats 😁

Ha! What a coincidence! It would be great if we could see graphs of the growth of individual communities over time.

> It’ll probably take a little while but I’ll see what I can do 👍👏

You’re awesome!

you could also use the communities export from lemmyverse data dumps. I’d have to add some more historical metrics into redis, for communities. I already keep historical metrics, but just don’t publish them.

Will need to check out lemmyverse and see what data is available.

Seems like a simple script doing an API query every 24 hours is the best option, but I would rather not deal with maintaining such a script/solution. :)

that’s pretty much what I do, it crawls through all the Lemmy sites (and Mbin) and data is updated each 6 hours. I’ve been thinking about storing a longer history of data so I could add some more graphs and stuff.

also I will probably publish the raw redis dump, which might allow for some more analytics based on it.

Please do publish the raw data, even if it is not visualised. Would be interesting to trawl through the history even if I do end up writing a basic API query service.

IDK if this counts as “writing a scraper,” but from `tmux` you could:

```sh
# Write the CSV header once
echo '"Daily Users","Weekly Users","Monthly Users","6-Month Users","Community ID","Subscribers","Posts","Comments"' > stats.csv

# Append one row of current counts every 24 hours
while true; do
  curl "https://YOUR_INSTANCE/api/v3/community?name=COMMUNITY_NAME" \
    | jq -r '[.community_view.counts | (.users_active_day, .users_active_week, .users_active_month, .users_active_half_year, .community_id, .subscribers, .posts, .comments)] | @csv' >> stats.csv
  sleep 24h
done
```

Edit: No auth needed, it’s simple

That request doesn’t need any auth.

Oop, you’re right, fixed.