Write everything in Rust, rustc will tell you what’s wrong.

There are absolutely people who believe that if you tell ChatGPT not to make mistakes, the output is more accurate 😩… it’s things like this where I kinda hate what Apple and Steve Jobs did by making tech more accessible to the masses

Well, you can get it to output better math by telling it to take a breathe first. It’s stupid, but LLMs got trained on human data, so it’s only fair that they mimic human output

breathe

Not to be rude, this is only an observation as an ESL speaker. Just yesterday, someone wrote “I can’t breath”. Are these two spellings switching places now? I’m seeing it more often.

No, it’s just a very common mistake. You’re right, it’s supposed to be the other way around (“breath” is the noun, “breathe” is the verb.)

English spelling is confusing for native speakers, too.

Whilst I’ve mostly avoided LLMs so far, it seems like that should actually work a bit. LLMs are imitating us, and if you warn a human to be extra careful they will try to be more careful (usually), so an LLM should have internalised that behaviour. That doesn’t mean they’ll be much more accurate though. Maybe they’d be less likely to output humanlike mistakes on purpose? It wouldn’t help much with the LLM-like mistakes that they’re making all on their own though.

You are absolutely correct and 10 seconds of Google searching will show that this is the case.

You get a small boost by asking it to be careful or telling it that it’s an expert in the subject matter. On the “thinking” models they can even chain together post-review steps.

attached a debugger to the LLM

interpret the input

This reads like someone who has heard of these general concepts but doesn’t understand them.

But then again, I just imagined trying to be 100% accurate while still being concise, and I don’t think it’s possible.

It’s also not really clear what the dynamic is supposed to be here. Is the LLM supposed to be invoking the generated code through a separate entry point like a test suite, or is the developer launching the built app with a debugger attached and feeding a prompt to the LLM whenever an exception is thrown?

Neither one of those would really be “attaching a debugger to the LLM” though, and in either case it would be interpreting the output not the input.

So, as usual this might be a translation issue, but I wasn’t aware that an attachment has a direction. The debugger would obviously feed its output to the LLM in this scenario, so the two things are attached to each other in the sense of the word that I got from a dictionary. Since the debugger gives a filename as well, feeding in the file’s contents, asking for improvements and overwriting the original file would be trivial, so automating it should be easy enough. Attachment was meant here only in the sense of “well, they’re connected and data goes from a to b”.

Would such a setup make sense? Not really. But you know, that’s why it’s a four-panel comic and not some overrated AI startup. Or maybe it is, it seems like making sense isn’t really a requirement for tech startups anymore.

Ah. Definitely a translation issue. I didn’t realize there was a translation involved. Or that you were the author. I wouldn’t have been so critical otherwise. You’re doing great.

“Attachment” in general doesn’t have a direction, but in the context of “attach debugger”, it does, because the target of the attachment is the process you want to inspect. In this case, the process is the code you’re writing, not the LLM helping you write it.

No worries, and please remain critical.

So, yeah, my mother tongue is German, so the English texts may be a little bumpy here or there. I feel confident enough with my English but that doesn’t change the fact that it’s a secondary language for me and this is not really a professional project where I could pay some natively English speaking nerd to fix my mistakes.

That said I’m aware how debuggers attach to the processes they’re analysing, I just wasn’t aware that this would turn the word exclusive if used in this context. Thanks for bringing it up though! Learned something!

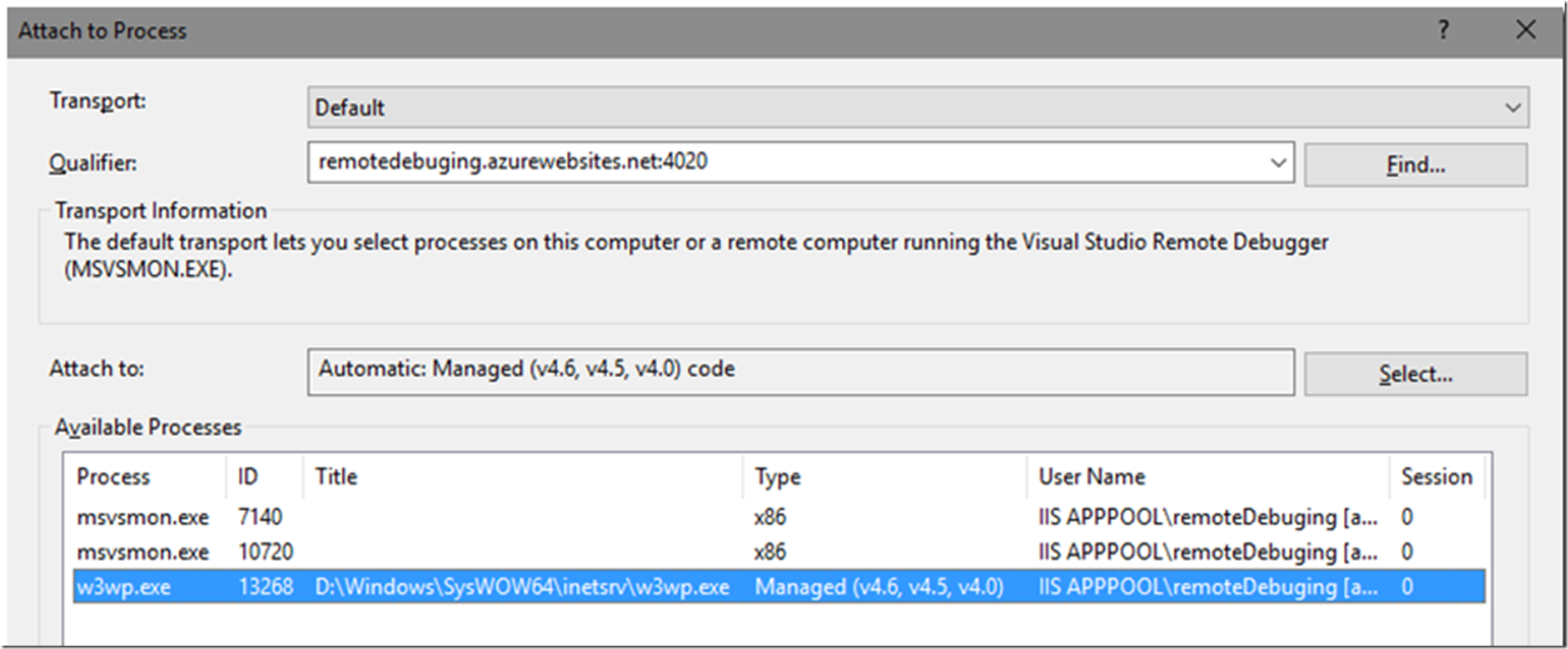

Yeah, that’s the technical lingo meaning. Attach a debugger means that you have a running process without a debugger running with it, and then you attach the debugger to it to get debug output from that process.

But I still don’t quite get the intended process.

So you run the code the LLM outputs with a debugger and let the LLM interact with the debugger? Not really sure if that helps, because the LLM would need to know how to operate the debugger and would need to understand what problems it should be looking for.

Current systems (e.g. GitHub Copilot) combine the LLM with static code analysis and compiler outputs to find and fix errors. They can also execute the code, run tests, and compare outputs with expected outputs.
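
To make that a bit more concrete, here is a rough sketch of the checking half of such a loop. It is not how Copilot or any real product does it; `check_candidate`, `CASES` and the `add` examples are made-up names for illustration, Python's built-in `compile()` stands in for "compiler output", and the step that sends the feedback back to an LLM is left out entirely.

```python
def check_candidate(source, func_name, cases):
    """Return a list of feedback strings; an empty list means every check passed."""
    # Step 1: syntax check. compile() plays the role of the compiler here.
    try:
        code = compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}: {err.msg}"]

    # Step 2: load the code. NOTE: exec() on untrusted text is unsafe;
    # a real tool would run this in a sandbox.
    namespace = {}
    try:
        exec(code, namespace)
    except Exception as err:
        return [f"error while loading: {err!r}"]

    func = namespace.get(func_name)
    if not callable(func):
        return [f"function {func_name!r} is not defined"]

    # Step 3: run the test cases and compare actual vs. expected output.
    feedback = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as err:
            feedback.append(f"{func_name}{args} raised {err!r}")
            continue
        if actual != expected:
            feedback.append(
                f"{func_name}{args} returned {actual!r}, expected {expected!r}"
            )
    return feedback


# Hypothetical test cases: (arguments, expected result).
CASES = [((2, 3), 5), ((-1, 1), 0)]

unparsable = "def add(a, b) return a + b"
wrong = "def add(a, b):\n    return a - b\n"
fixed = "def add(a, b):\n    return a + b\n"

for candidate in (unparsable, wrong, fixed):
    print(check_candidate(candidate, "add", CASES) or "all checks passed")
```

In a real setup the feedback list would go back into the next prompt and the loop would repeat until it comes back empty or a retry limit is hit.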

Am I misunderstanding something or does this sound like programmers making themselves unnecessary?

Only the very junior ones who are writing code without understanding why they’ve been asked to write it. Anyone with more than about 18 months’ experience will be able to start deciding what to actually build, and I haven’t seen LLMs be particularly helpful with that yet.

That is so dark.

C is easy! Just don’t make any mistakes :)

Syntax errors != Logic errors
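
A tiny illustration of the difference, with a made-up `average` function: the source below parses without complaint, so a syntax check is perfectly happy, and it still gives the wrong answer.

```python
import ast

# Hypothetical example code: syntactically valid, logically wrong.
SOURCE = '''
def average(values):
    return sum(values) / (len(values) + 1)  # bug: divisor is off by one
'''

ast.parse(SOURCE)  # raises SyntaxError on bad syntax; here it passes silently

namespace = {}
exec(SOURCE, namespace)
result = namespace["average"]([2, 4, 6])

# The correct average of 2, 4, 6 is 4.0, but the buggy code computes 12 / 4.
print(result)
```

Only a test that knows the expected value catches this one; the parser never will.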