My old SanDisk SATA SSD was starting to get really slow for some reason. The SMART data and the SanDisk SSD Dashboard app were saying the SSD was healthy, but its performance wasn’t anywhere near what it was when brand new.

When benchmarked, it was all over the place with looong access times:

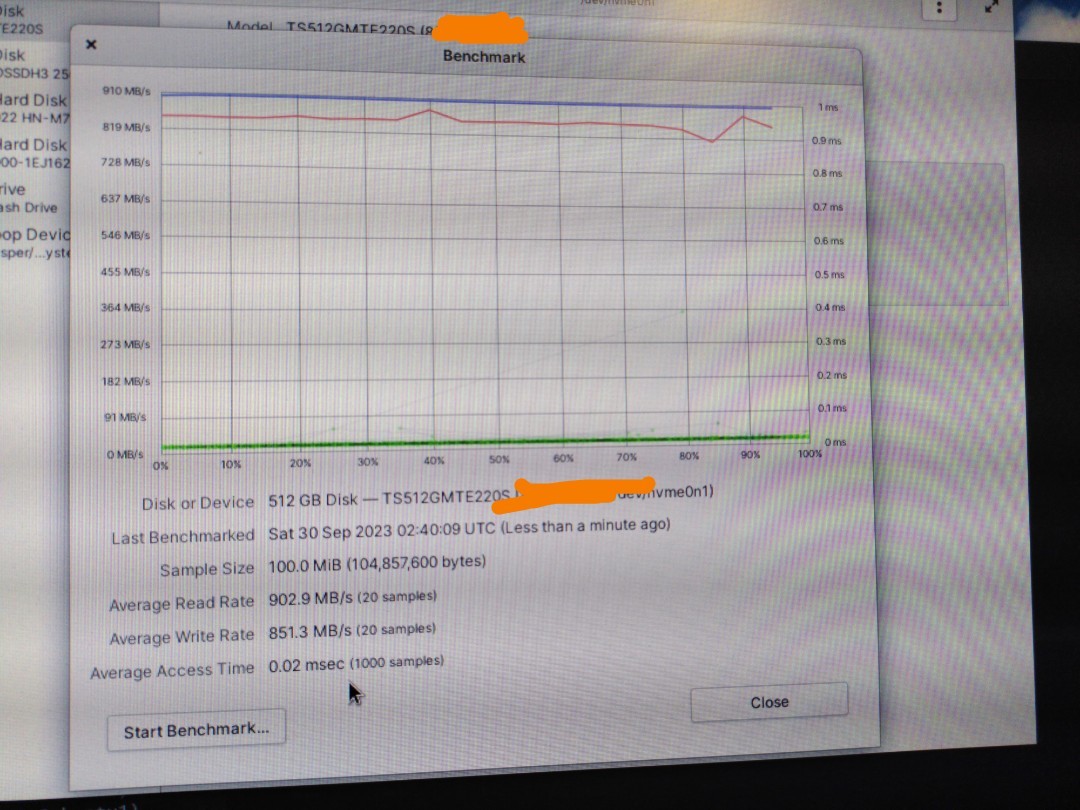

Sooo I decided to take the opportunity to upgrade the SSD to something faster - ended up grabbing a Transcend 512GB NVMe drive with onboard DRAM.

There were two problems though:

- My motherboard doesn’t support NVMe (at least officially)

- My only available PCIe slot is an x1/single lane

After researching, I realised that the single PCIe lane would still give me almost 1GB/s in real world usage - even though it’s far from the 3GB/s the drive is rated for, it’s double the speed of SATA and it’s worlds apart from my SanDisk SSD lol.
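For anyone who wants to check the numbers, here is a rough sketch of the arithmetic. It assumes the slot is PCIe 3.0 (the generation isn't stated above, but "almost 1GB/s" matches a Gen 3 lane) and compares it with SATA III. The function names are made up for this example, and the results are theoretical ceilings after line encoding, before protocol overhead.

```python
# Per-lane raw rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_mb_s(generation, lanes=1):
    """Theoretical one-direction bandwidth in MB/s for a PCIe link."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    bits_per_second = rate_gt_s * 1e9 * efficiency
    return bits_per_second / 8 / 1e6 * lanes


def sata3_bandwidth_mb_s():
    """Theoretical SATA III bandwidth in MB/s (6 Gb/s line rate, 8b/10b)."""
    return 6.0e9 * (8 / 10) / 8 / 1e6


if __name__ == "__main__":
    print(f"PCIe 3.0 x1: {pcie_bandwidth_mb_s(3, 1):.0f} MB/s")  # roughly 985
    print(f"PCIe 3.0 x4: {pcie_bandwidth_mb_s(3, 4):.0f} MB/s")  # roughly 3938
    print(f"SATA III:    {sata3_bandwidth_mb_s():.0f} MB/s")     # 600
```

If the slot turned out to be PCIe 2.0 instead, the same function gives about 500 MB/s for one lane, so the generation assumption matters a lot here.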

Ordered an NVMe to PCIe adapter, and proceeded to chop up my PCIe slot to make it fit:

PCMR NSFW

It took a while since I don’t own a dremel 🤪

Once that was done, I kapton taped up the exposed metal bits on the NVMe adapter that could short on a nearby mobo heatsink.

In it goes!! (The GPU went in after the pic lol)

After re-running the benchmarks, OMG the speed difference is insane, although it’s limited by that single PCIe lane.

I was caught off guard by something else though. After cloning my existing install to the new NVMe SSD, it booted right up, with the original Sandisk drive gone. My BIOS does not even recognise the NVMe drive as a disk drive, and there are no settings anywhere in there for it.

BIOS person, thank you whoever you are, you saved me needing to do more jank to get my unsupported NVMe drive working!

I am more than happy so far with the dramatic speed increase compared to the SATA drive. I can now actually shut down my desktop when I’m not using it 🥲

TIL you can install PCI cards by just…taping up extraneous pins??? And they work that way??? Cooooool.

PCIe is extremely flexible. Oftentimes those big 16x slots are actually only wired for 8x or even 4x. The device has to be able to support running on fewer lanes than expected because of stuff like this.
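A simplified way to picture the fallback: the link comes up at the widest width the card supports that the slot also has wired. The sketch below is only a toy model of that idea, not the actual link training procedure, and `negotiated_width` is a made-up name for illustration.

```python
def negotiated_width(card_widths, slot_wired_lanes):
    """Pick the widest link width the card supports that fits the slot's wiring.

    card_widths: set of lane counts the card can run at, e.g. {1, 4}.
    slot_wired_lanes: number of lanes actually wired to the slot.
    """
    usable = [width for width in card_widths if width <= slot_wired_lanes]
    if not usable:
        raise ValueError("card supports no width that fits this slot")
    return max(usable)


if __name__ == "__main__":
    # An x4 NVMe adapter in a slot with one wired lane runs at x1.
    print(negotiated_width({1, 4}, 1))
    # An x16 GPU in a physical x16 slot that is only wired for x8 runs at x8.
    print(negotiated_width({1, 2, 4, 8, 16}, 8))
```

In this model a card that only lists x1 and x16 would drop all the way to x1 in an x8-wired slot, since it has nothing in between to fall back to.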

Well, it’s basically running at PCIe 1x. That’s why there’s all those notches on the full 16x card. You can install in a 1x, 2x, or 4x header, and it will work. Problem is some cards don’t have the notches and need the full connector, but supposedly you can grind off some plastic from the port to make it fit and it will still work.

> supposedly you can grind off some plastic from the port to make it fit and it will still work.

You absolutely can do this. Often 1x or 4x slots come with open backs for this reason - it’s better than only wiring up that many lanes in a 16x slot IMO!

What gets me is the number of people who take a saw to a PCIe card instead of just opening the back of the slot up… :(

Also this is PCIe, not PCI. It’s just got a few gaps because it’s actually only x4, and the other pins are just there for stability.

You don’t even need to tape them up. The slot is open ended explicitly for this kind of situation. The extra contacts just dangle and have no power running through them since they aren’t connected.

I’ve never actually seen an open-ended slot though, and OP had to cut his open. Probably for the exact reason that OP added the kapton tape, because it would be stupidly easy to short out those exposed pins.

Oh? Weird, I thought it was standard. Looking at the PCs in my house it seems I was very wrong, none of them have an open-ended 1x.

Maybe it’s the Mandela effect.

Some of my server boards do, so they do exist, but I haven’t seen it much on consumer stuff.

Yeah, this is where I ended up as well. I could have sworn I currently owned a few boards with it, but of the 7 I checked none of them had it.

I’ve seen some, but they’re less common now and you’d only see 1 or 2 on a board.

Now they mostly put in larger slots but don’t connect the pins.

I’m confused, why didn’t you get a 1x PCIe carrier board?

Price mainly, and I wanted something that wouldn’t be constrained to a single lane in another mobo in future.

How much space was left on the original 256GB disk? A common theme I’ve heard about solid state drives is that speed suffers the more space is used, so ideally you should keep it ~50% free for optimal performance.

The original SSD was rammed full, only about 20GB free. Writing files was super fast - it was mainly reading files that the SSD struggled to do at a palatable speed, often locking up my PC for a few seconds.

With the new SSD I’ve just left the remaining space unallocated, so its firmware can freely use that space for whatever it needs to 😁

Nice job! This is the type of stuff I come here for.

the fact that it fires up without BIOS recognition is cool and weird… that moment it boots after that kind of migration upgrade is like nothing else…

and i gotta say awesome mod dude… that was one cool ass solution with available hardware…

Aren’t you still bound by the limits of the SATA standard? So while the NVMe might have cheaper storage, the speed is bottlenecked.

Is NVMe really cheaper? I thought SATA was the cheaper one.

NVMe has been cheaper for a while now.

handy website for comparing disk prices

Cutting the back of x4/x8 slots is pretty common. Some motherboards even have open back slots as default.

Great work, OP. A very nice and well thought out upgrade (most folk skip the kapton tape). It’s worth everyone knowing that PCIe slots are generally universally compatible. So a GPU (wanting a 16x slot) can run in a 1x physical slot (in fact this is how GPU mining expansion rigs work).

I don’t think I’ve ever encountered a PCIe card that will not function at all unless it receives its required lanes.

One of the few things that’d be problematic would be the x16 -> quad M.2 cards which use PCIe bifurcation.

Lanes 1-4 from the socket are wired to the first M.2, 5-8 to the 2nd, etc. It would still work (by some definition of the word), but in the sense that the first M.2 drive would get 1 lane and any others wouldn’t be connected.

(Quad M.2 boards with a “PLX” or other PCIe switch chip would work fine with 1 upstream lane serving all 4 drives)
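To make the bifurcation case concrete, here is a small sketch of the fixed lane-to-drive wiring described above for a passive (no switch chip) quad M.2 card. The function name and defaults are just for illustration.

```python
def lanes_per_drive(slot_lanes, drives=4, lanes_each=4):
    """Return how many lanes reach each M.2 socket on a passive bifurcation card.

    Lanes are hard-wired in order: the first `lanes_each` lanes go to drive 1,
    the next group to drive 2, and so on. Nothing is shared or rerouted.
    """
    result = []
    for index in range(drives):
        first_lane = index * lanes_each
        connected = max(0, min(slot_lanes - first_lane, lanes_each))
        result.append(connected)
    return result


if __name__ == "__main__":
    print(lanes_per_drive(16))  # [4, 4, 4, 4]
    print(lanes_per_drive(8))   # [4, 4, 0, 0]
    print(lanes_per_drive(1))   # [1, 0, 0, 0]
```

With a single lane only the first drive is reachable, which matches the "works by some definition" case. A card with a PCIe switch avoids this because the switch shares the one upstream lane between all four drives.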

This is cursed and I love it!

…so that’s what the PCIe x1 slot is for, huh?

Lol. You can shove all sorts of crap in those.

I’ve got a few 1x cards. For example, my old home server box has a 1x slot that you can plug a 1x GPU, an additional networking card, USB, etc. into.

Had the same slowness issue with my SanDisk. It was pretty close to failure, it seems.

But I had a backup!

I had a (slightly old) image backup just in case, thankfully didn’t need to use it though!

Props to you, awesome job.

UNLESS you are limited by budget, (which of course is a perfectly understandable reason) 👍

It makes zero sense to me why you would do all that instead of just getting a motherboard with an M.2 slot and putting the SSD there.

It makes no sense to you why someone would reuse what they have rather than buy something new?

Because it doesn’t look very safe from an electrical standpoint.